Replacing Google Analytics with just GoAccess

By my estimates, approximately two thirds of the pageviews on my blog do not register in Google Analytics. Given the type of content I publish, it makes sense that most of my readers run an ad blocker.

I wanted to get more accurate numbers for:

- Pageviews over time

- Sources (referrers in particular)

- Location of visitors

These are probably the most relevant for any personal blog.

Because I only cared about these numbers, I realized that I didn't need 99% of GA's features. That greatly simplified the requirements when I was looking for alternatives.

I also looked for the simplest tool possible. I didn't want to manage dependencies or spin up another server.

And GoAccess satisfies all of the above. It works by parsing logs, so no other dependencies are required. It correctly parses nginx logs with no additional setup. It shows visits over time and has information about referrers. It also supports GeoIP so it can show the location of visitors.

It also has the added benefit of not sending data anywhere, resulting in better performance and privacy.
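The following sketch gives a sense of what "parsing logs" means for two of the numbers listed above: hits over time and referrers. It is not how GoAccess works internally, since GoAccess is written in C and does far more. The sketch assumes nginx's default `combined` log format. It counts raw hits, not pageviews, because it applies none of the crawler or static-file filtering GoAccess offers.

```bash
# Illustrative only: summarise a COMBINED-format access log read from stdin.
# Example line:
#   1.2.3.4 - - [10/Oct/2023:13:55:36 +0000] "GET /a/ HTTP/1.1" 200 512 "https://ref/" "UA"

# Hits per day, in the order the days first appear in the log.
# Whitespace-separated field 4 looks like "[10/Oct/2023:13:55:36".
hits_per_day() {
  awk '{
    d = substr($4, 2, 11)              # "10/Oct/2023"
    if (!(d in n)) order[++k] = d
    n[d]++
  }
  END { for (i = 1; i <= k; i++) print order[i], n[order[i]] }'
}

# Referrers, most frequent first. Splitting on double quotes makes
# field 4 the referrer; "-" means no referrer was sent.
top_referrers() {
  awk -F'"' '$4 != "-" && $4 != "" { n[$4]++ }
             END { for (r in n) print n[r], r }' | sort -rn
}

# Example (not run here):
#   hits_per_day < /var/log/nginx/ognjen.io.access.log
```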

Install GoAccess

The Get Started page has installation instructions. I suggest building it from source because the version in the PPA is outdated. It's quite straightforward: the usual wget, tar, configure, make, make install.
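The build steps can be wrapped in a single shell function. This is a sketch under assumptions: it uses Ubuntu/Debian package names (`build-essential`, `libncurses-dev`, `libmaxminddb-dev`) and the `tar.goaccess.io` download URL pattern. Check both against the Get Started page. No version is hard-coded, so you pass the current release number as the argument.

```bash
# Sketch: build and install GoAccess from source on Ubuntu/Debian.
# Assumptions: the apt package names and the tar.goaccess.io URL pattern.
# Usage: build_goaccess <version>   (take the version from the Get Started page)
build_goaccess() (
  # The body runs in a subshell, so "set -e" and "cd" don't leak into the caller.
  set -euo pipefail
  version="${1:?usage: build_goaccess <version>}"
  tarball="goaccess-${version}.tar.gz"

  # Compiler toolchain, ncurses headers, and libmaxminddb-dev, which provides
  # the development files that --enable-geoip=mmdb needs.
  sudo apt-get update
  sudo apt-get install -y build-essential libncurses-dev libmaxminddb-dev wget

  wget "https://tar.goaccess.io/${tarball}"
  tar -xzf "$tarball"
  cd "goaccess-${version}"
  ./configure --enable-utf8 --enable-geoip=mmdb
  make
  sudo make install
)
```

The function body is a subshell rather than braces. That lets `set -e` stop the build at the first failing step without also changing the options of the shell you call it from.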

I installed it on the server, but you could also install it locally. However, if you install it locally, you’ll have to periodically copy the logs or pipe them.
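For the local setup, the piping option can look roughly like this. It is a sketch that assumes a hypothetical SSH host alias passed as the first argument, and that your SSH user can read the nginx log on the server. GoAccess treats `-` as "read the log from standard input".

```bash
# Sketch: run GoAccess locally on a log streamed from the server over SSH.
# Assumptions: "$1" is an SSH host alias you define (hypothetical), and your
# SSH user can read the nginx log on the server.
remote_report() {
  local host="$1"
  local log="${2:-/var/log/nginx/ognjen.io.access.log}"
  local out="${3:-report.html}"

  # "cat" runs on the server; "-" tells GoAccess to read the log from stdin.
  ssh "$host" cat "$log" | goaccess - --log-format=COMBINED -o "$out"
}
```

For example, `remote_report myserver` would write report.html to the current directory. Streaming the whole log on every run gets slower as the log grows, which is one reason running GoAccess on the server can be simpler.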

Missing development files for libmaxminddb library

If you get that error while building, install the libmaxminddb-dev package, which provides the missing development files. On Ubuntu and Debian, I installed it with apt.

Run GoAccess

I experimented with the options and this is what I ended up with:

```bash
# Collect the options in an array so each one can carry its own comment.
goaccess_opts=(
  --ignore-crawlers               # Remove most bots from the report. This switch is
                                  # very important: in the web output below you can
                                  # see that out of 1000 requests only 168 are legitimate.
  --log-format=COMBINED           # nginx and Apache logs are parsed using the COMBINED format
  --no-query-string               # My blog doesn't use query strings, so parsing can be
                                  # sped up by ignoring them
  --ignore-panel=NOT_FOUND        # I don't need to see 404s; they're mostly bots probing anyway
  --ignore-panel=HOSTS            # I don't care about IPs
  --ignore-panel=OS               # Or the users' OS
  --ignore-panel=BROWSERS         # Or browsers
  --ignore-panel=KEYPHRASES       # Or Google search terms (that panel is empty anyway)
  --ignore-panel=STATUS_CODES     # Or other status codes
  --ignore-panel=REQUESTS_STATIC  # Or static files
  --geoip-database=/home/regac/geolite/...mmdb
                                  # If you have downloaded the GeoLite City database,
                                  # you can see where the users are from
  --persist                       # Store the parsed logs in a database for quicker
                                  # retrieval next time
  --db-path=/home/regac/goaccess-db
                                  # The default location is /tmp; I wanted to make sure
                                  # I didn't overwrite it when running on other logs
  --restore                       # Retrieve from the database (--persist saves new
                                  # entries and --restore loads everything stored)
)
goaccess /var/log/nginx/ognjen.io.access.log "${goaccess_opts[@]}"
```

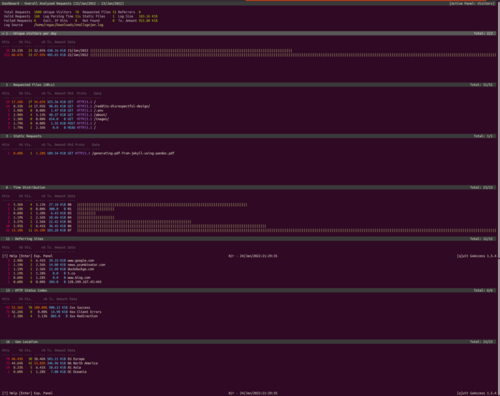

That produces terminal output that looks like this:


Other output formats

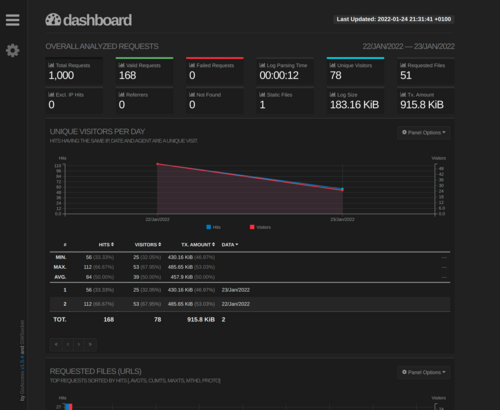

There are several output formats, including a very presentable html version that is generated with the -o report.html switch. It looks like this:


The result is a single self-contained HTML file with the data embedded, which makes it easy to host.
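One way to host the report is sketched below under assumptions: the report is served from a hypothetical `/var/www/stats` directory and regenerated on a schedule of your choosing. The function writes to a temporary file first, then renames it into place, so readers never fetch a half-written report. It passes `--restore` without `--persist`. Each run therefore reads the stored history plus the current log but does not write the current log into the database. That ingestion can happen once the log is rotated, as described in the next section.

```bash
# Sketch: regenerate the self-contained HTML report and publish it atomically.
# Default paths are hypothetical; /var/www/stats in particular is an assumed web root.
publish_report() {
  local log="${1:-/var/log/nginx/ognjen.io.access.log}"
  local out="${2:-/var/www/stats/index.html}"
  local db="${3:-/home/regac/goaccess-db}"
  # Keep the .html extension: GoAccess picks the output format from it.
  local tmp_out="${out%.html}.tmp.html"

  mkdir -p "$(dirname "$out")" || return 1
  # --restore loads previously persisted data; without --persist this run only
  # reads the database and never writes the current log into it.
  goaccess "$log" --log-format=COMBINED --ignore-crawlers \
    --restore --db-path="$db" -o "$tmp_out" || return 1
  # Rename into place so readers never see a half-written report.
  mv -f "$tmp_out" "$out"
}
```

Add the other options from the command above (GeoIP database, ignored panels) as needed. They are left out here to keep the sketch short.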

Managing logs

If you use --persist, you can remove old logs after they’re ingested.

If you rotate your logs, you can add this to the crontab to run after the logs are rotated:

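```bash
goaccess /var/log/nginx/ognjen.io.access.log.1 \
  --log-format=COMBINED \
  --restore \
  --persist \
  --db-path=/home/regac/goaccess-db
```

This command only parses the rotated log and adds its hits to the GoAccess database. --restore loads what is already stored, so --persist writes the combined data back instead of replacing it. Because logrotate usually leaves the most recently rotated file (.1) uncompressed when delaycompress is set, this also saves you the step of decompressing older logs.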
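When that step runs from cron, a small wrapper can handle a few edge cases. This is a minimal sketch under assumptions: the default paths are the ones used above, and the script name and schedule in the comment are hypothetical. It skips the run if no rotated file exists yet. It passes `--restore` only once the database directory has content, because on the very first run there is nothing to load. It discards stdout so cron doesn't mail whatever GoAccess prints there. Pairing `--restore` with `--persist` follows the incremental-processing examples in the GoAccess man page, and is worth confirming against the version you installed.

```bash
#!/usr/bin/env bash
# Sketch of a cron wrapper (hypothetical name: goaccess-ingest.sh) that adds the
# most recently rotated nginx log to the GoAccess database.
# Example crontab entry (hypothetical path; schedule it after logrotate runs):
#   30 6 * * * /usr/local/bin/goaccess-ingest.sh

ingest_rotated_log() {
  local log="${1:-/var/log/nginx/ognjen.io.access.log.1}"
  local db="${2:-/home/regac/goaccess-db}"
  local restore=()

  # Nothing to do until logrotate has produced a rotated file.
  [ -f "$log" ] || return 0

  mkdir -p "$db" || return 1
  # Load previously stored data only if the database directory has content;
  # on the very first run there is nothing to restore yet.
  if [ -n "$(ls -A "$db")" ]; then
    restore=(--restore)
  fi

  # The ${arr[@]+...} form keeps an empty array safe under "set -u" on older bash.
  # Stdout is discarded so cron doesn't mail whatever GoAccess prints there.
  goaccess "$log" --log-format=COMBINED --ignore-crawlers \
    ${restore[@]+"${restore[@]}"} --persist --db-path="$db" > /dev/null
}

# Run only when executed directly, not when sourced.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  set -euo pipefail
  ingest_rotated_log "$@"
fi
```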

Limitations

You lose your previously collected data

Whenever you switch to a new analytics platform, you lose the data previously collected.

How much you lose depends on how long you keep your logs, since GoAccess can import anything still on disk. Luckily, I had set up logrotate to keep logs for a year, so I didn't lose much data.

Filtering

At the moment, the only way to filter the results is to run awk, sed, or grep on the raw logs before passing them to GoAccess, which means you must keep the logs around. That's not very convenient.

But an issue on the GoAccess repo tracks a new filtering feature, which will also support filtering persisted logs. It seems to be actively worked on and coming soon.
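Until then, the awk route can be packaged as two small pre-filters. This is a sketch that assumes the COMBINED format, where whitespace-separated field 4 holds the opening of the timestamp and field 7 the request path. The `/posts/` prefix in the usage comment is a hypothetical URL structure.

```bash
# Sketch: pre-filter a COMBINED-format log with awk before handing it to GoAccess.
# Both helpers read the log on stdin and print matching lines unchanged.

# Keep requests whose path (field 7) starts with $1.
filter_by_path() {
  awk -v prefix="$1" 'index($7, prefix) == 1'
}

# Keep requests from a single day, given as DD/Mon/YYYY (e.g. 10/Oct/2023).
# Field 4 looks like "[10/Oct/2023:13:55:36".
filter_by_day() {
  awk -v day="[$1:" 'index($4, day) == 1'
}

# Example (not run here): report only on blog posts, reading the log from stdin.
#   filter_by_path /posts/ < /var/log/nginx/ognjen.io.access.log \
#     | goaccess - --log-format=COMBINED -o posts.html
```

Using `index()` rather than a regex match means characters like `.` or `?` in the prefix are matched literally.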

Remarketing

Remarketing, of course, does not work. For smaller and/or personal sites, it’s not a problem. But for a commercial product, it might be a deal-breaker.

Events

I considered using GoAccess on a side project as well. But the lack of events made it a no-go.

However, it occurred to me that I could make a dummy endpoint that just responds with 200, for example /events/purchase, and call it instead of sending events to Google Analytics. Each call would then show up in the access log like any other request, which would make it possible to recreate most of the events functionality from GA.
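Once such calls are in the log, counting them is a small awk job. This is a sketch under assumptions. The `/events/<name>` layout is hypothetical. Only requests answered with 200 are counted. The endpoint itself could be served any way you like; an nginx `location /events/` block containing `return 200;` is one option. These requests should also appear in GoAccess's requested-files panel like any other URL.

```bash
# Sketch: count hits on hypothetical /events/<name> endpoints in a
# COMBINED-format log read from stdin, most frequent first.
count_events() {
  awk '
    # Field 7 is the request path, field 9 the status code.
    $7 ~ /^\/events\// && $9 == 200 {
      name = substr($7, length("/events/") + 1)
      sub(/[?].*/, "", name)            # drop any query string
      if (name != "") n[name]++
    }
    END { for (e in n) print n[e], e }
  ' | sort -k1,1nr -k2,2
}

# Example (not run here):
#   count_events < /var/log/nginx/ognjen.io.access.log
```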